Many of us find the concept of fate extremely seductive; it is admittedly difficult not to wonder whether the stars have perfectly aligned when extraordinary coincidences occur. Many of us have dreamed about a long-forgotten friend only to run into him the very next day, but should we necessarily ascribe coincidences like this to a higher power? Coincidences of this type are usually a collision of two individual events, and any individual event can be framed as having two possible outcomes: it either occurs, or it does not. Either the cloud above you looks like an elephant or it does not. Either the phone rings at 8:52 P.M. or it does not.

Sometimes, two individual events coincide in a way that strikes you as noteworthy: Patricia calls you moments after you thought of her, or you dream about a long-forgotten friend only to run into him the very next day. This collision of events is where the term coincidence earns its keep. Notice that coincidences rely on two or more individual events colliding in a way that strikes you as transcendentally directed, perhaps by some divine being or by fate itself. We have all experienced a seemingly eerie combination of multiple variables coinciding unexpectedly: you have a frightening dream about your mother and wake up to a voicemail saying that she has gone to the hospital, or you mention in passing that you have never broken a bone and then fall awkwardly, to your femur’s detriment, later that day. But how often do occurrences of this type, collisions of two individual events, come whizzing past our faces, only to go unnoticed? Moreover, how often do coincidences simply fail to occur?

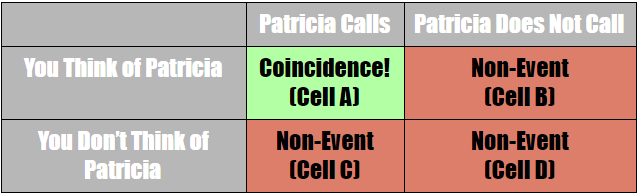

Here is where Cell A Bias, one’s tendency to ascribe authorship or divine intervention to random events, comes into play. (Cell A refers to the top-left cell of a two-by-two matrix, the cell that we call coincidence.) There are two individual events, each of which could either occur or not. Let us consider the example of Patricia calling you moments after you think of her. In this case, the two events are your thinking of Patricia and her calling you. Each of the two events could either occur or fail to occur, which gives us four possible combinations. These combinations can be diagrammed using a two-by-two matrix.

As stated, most forms of Cell A Bias arise from our mistakenly interpreting two events as indicative of some underlying reason or purpose.

Since any combination besides Cell A involves a non-event, the resulting possibilities are: Cell A (Event), Cell B (Non-Event), Cell C (Non-Event), and Cell D (Non-Event), as shown in the matrix above. Notice there are three non-event cells and only one event cell. If you think of Patricia, but she does not call you moments later, you do not register that as a salient event. Likewise, should Patricia happen to call you without your having thought of her first, no event is marked. Obviously, if neither event occurs (the phone does not ring, and you do not think of Patricia), no connection is made. We need not debate which combinations are more likely than others. There are, in fact, countless non-events happening right now. You are not thinking of a particular loved one, nor are they calling you at this particular instant. Your father twists his ankle, but you did not dream about it the previous night. However, your brain cannot keep tabs on every non-event, so Cells B, C, and D go unnoticed while the “perfect storm” brings events in Cell A to the forefront of your consciousness.
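To make the four combinations concrete, here is a minimal sketch in Python. The probabilities are purely illustrative assumptions (a 5% chance of thinking of Patricia in a given hour and a 2% chance of her calling), as is the assumption that the two events are independent. The assignment of Cells B and C to the two "one event only" cases is also an assumption for illustration; only Cell A (both events occur) is fixed by the text.

```python
from itertools import product

# Assumed labeling: A = both occur, B = thought only, C = call only, D = neither.
CELL_LABELS = {
    (True, True): "A",
    (True, False): "B",
    (False, True): "C",
    (False, False): "D",
}


def cell_probabilities(p_think, p_call):
    """Probability of each cell of the 2x2 matrix, assuming independent events."""
    cells = {}
    for think, call in product([True, False], repeat=2):
        p_first = p_think if think else 1 - p_think
        p_second = p_call if call else 1 - p_call
        cells[CELL_LABELS[(think, call)]] = p_first * p_second
    return cells


# Hypothetical numbers, chosen only to illustrate the shape of the matrix.
cells = cell_probabilities(p_think=0.05, p_call=0.02)
for name, p in cells.items():
    print(f"Cell {name}: {p:.4f}")
```

Under these assumed numbers, nearly all of the probability sits in the non-event cells, with Cell D (nothing happens) dominating. The sketch is not meant to estimate real frequencies, only to show how little of the matrix Cell A occupies when both events are individually uncommon.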

Evolutionarily, it actually makes sense for us to assign authorship to natural events that most likely have none. Our brain simply cannot hold memories of every time a coincidence failed to occur, so the only memories we do retain are those of seeming authorship. It is evolutionarily useful for us to make meaningful connections that allow our brains to retain more information, and insofar as complying with Cell A Bias allows for this, the process likely has survival value. If Cell A Bias allows us to make more neurologically meaningful connections about patterns in the world, whether true or not, this was likely evolutionarily advantageous. Therefore, we find coincidences of this sort to be meaningful simply because we fail to notice the trillions of non-events happening all around us.

In fact, simple probability and the law of large numbers practically guarantee that some coincidences must occur. It would be extraordinary if no events ever happened to coincide. In this context, Cell A Bias tells us that we should be more skeptical of coincidences and avoid automatically ascribing them to a higher power or fate. Of course, a higher power or fate could be the reason why coincidences arise, but Cell A Bias should make you skeptical the next time you notice one.
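The claim that some coincidences are practically guaranteed can be illustrated with a short calculation. The sketch below assumes that each opportunity for a coincidence is independent and has the same small probability `p` of landing in Cell A; both the independence assumption and the value `p = 0.001` are hypothetical, chosen only for illustration. The chance of at least one coincidence in `n` opportunities is then `1 - (1 - p) ** n`.

```python
def prob_at_least_one(p, n):
    """Chance that an event of probability p occurs at least once in n independent tries."""
    return 1 - (1 - p) ** n


# Hypothetical per-opportunity probability of a Cell A coincidence.
p = 0.001
for n in (1, 100, 1_000, 10_000):
    print(f"{n:>6} opportunities: {prob_at_least_one(p, n):.4f}")
```

Even with a one-in-a-thousand chance per opportunity, the probability of seeing at least one coincidence climbs toward certainty as the number of opportunities grows, which is the sense in which an occasional striking coincidence is exactly what chance alone would predict.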