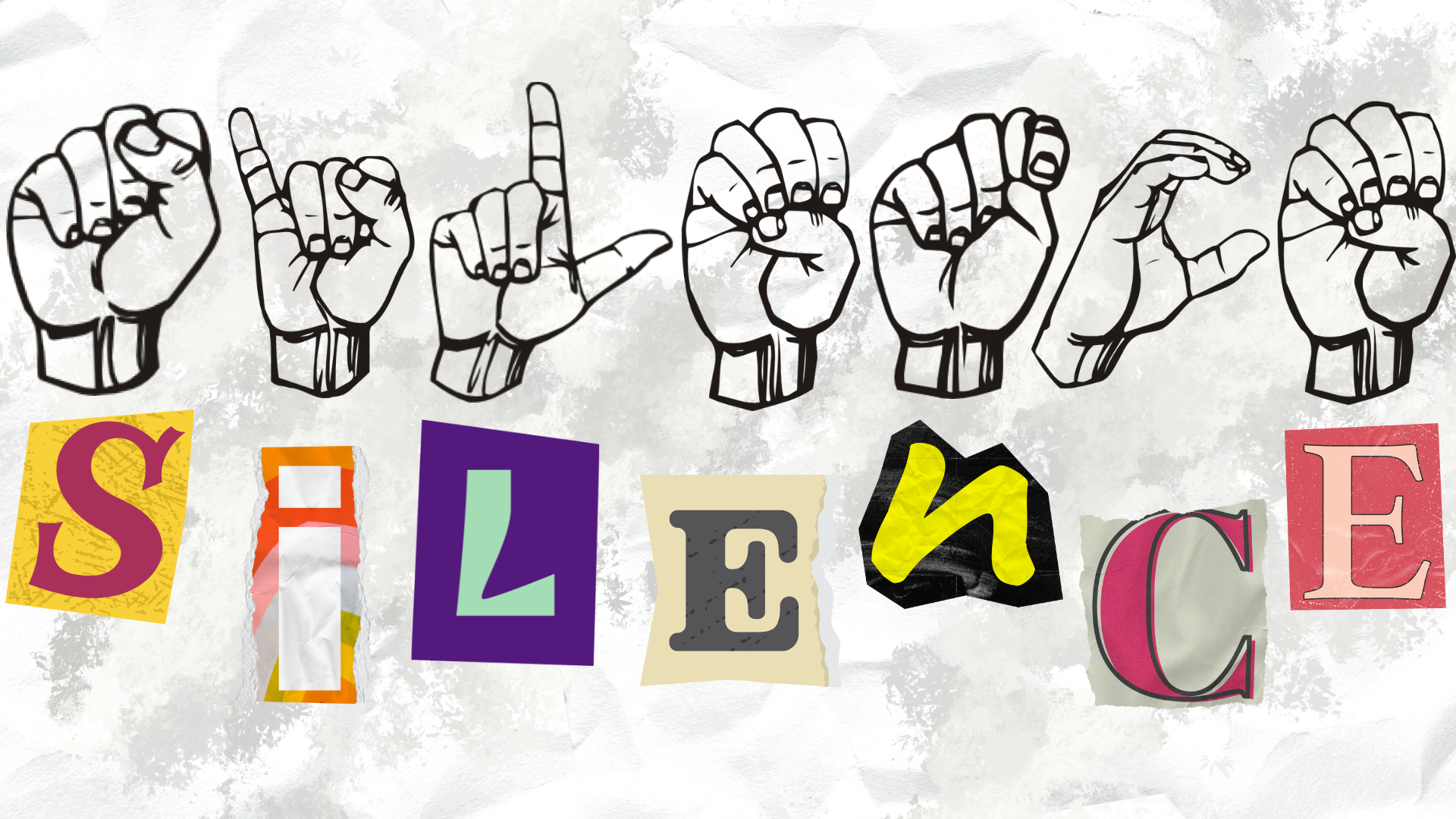

Spoken with Silence

By Charley Wan

Non-verbal does not mean non-language. We can show instead of tell, demonstrate instead of describe. Sign language is a unique instance where meaning can be conveyed through silence. The complex involvement of the hands, body, and brain has sparked multiple debates about how the reception and production of signed languages resemble and differ from those of spoken languages. Many studies have also examined the unique aspects of sign language acquisition. Some may ask, “What about gestures? Do they count?” Through the lens of theoretical psychology and neuroscience, these ongoing debates may be settled.

Sign languages emerged naturally as deaf and hard-of-hearing individuals gathered and interacted within communities. The earliest mention of sign language came in Plato’s Cratylus, where the philosopher Socrates, in the fifth century B.C., discussed how language would be communicated without the crude spoken word. The first use of a manual alphabet system, on the other hand, can be attributed to a monk, Saint Bede the Venerable, in the seventh century, as a means of communication among the religious. Deaf and hard-of-hearing individuals initially faced stigma, though, as being unable to hear or speak was considered a sign of low intelligence and an inability to learn. However, as more standardized alphabets developed and deaf education became more prominent, sign language established itself as a distinct linguistic phenomenon with its own grammar and lexicon, and it gained international recognition. One milestone was the publication of the first book for sign language education by Juan Pablo Bonet in Spain in 1620. Today there are many notable sign languages around the world, such as ASL (American Sign Language), BSL (British Sign Language), and CSL (Chinese Sign Language), to name a few.

As a general preface to its subtleties, sign language aligns with spoken languages in two respects: duality of patterning and recursion. Duality of patterning describes how languages are composed of small, meaningless elements that, when combined, create larger components with meaning. In spoken languages, these elements can be understood as individual speech sounds that become meaningful words when put together. Sign language has narrowed these small elements down to five categories: handshape, palm orientation, place of articulation, movement, and non-manual expression (features that don’t involve the hands, like facial expressions). Once applied, there are numerous ways to string these five elements together and generate comprehensive “silent speech.” Recursion, the second property, is the ability to embed one phrase or clause inside another of the same kind, so that a finite set of rules can produce an unlimited number of sentences.

So how are sign languages different, then? One of many debates concerns how the brain receives words and signs, and how it produces them. Naturally, Broca’s and Wernicke’s areas, the brain regions for spoken language production and comprehension respectively, were studied first. A 2022 review by Hayley Bree Caldwell emphasized disputes over lateralization and the involvement of additional brain regions in sign language comprehension. While it is true that sign language activates Broca’s and Wernicke’s areas, the degree and nature of activation depend on language modality. For example, a region in the left parietal lobe, the left supramarginal gyrus (SMG), was more active in signers than in non-signers when both groups viewed the same signs. One proposed reason for the increased SMG activity is its involvement in taking the spatial elements of sign language and abstracting them into phonological meaning. Because sign language requires an extra step between the sensory stimulus and language comprehension, more brain regions are recruited in its reception.

On the topic of sign language production, studies by Karen Emmorey and Mairead MacSweeney, both featured in the review, have also found that the parietal lobe is engaged. It seems that while the left parietal lobe handles more spatial processing and general sign production, the right parietal lobe handles the transformation of signs in physical space to internal representations of signs. Studies have further narrowed this down to possible contributions from the superior parietal lobe and its role in using iconic sign location to produce classifier information (a category name for a group of nouns) for objects. Experiments found that when the left superior parietal lobe was inhibited using transcranial magnetic stimulation, participants had trouble detecting and correcting spatial errors, while their fine motor production of signs was not hindered. As a result, the bilateral participation of parts of the parietal lobe points to a role in language processing beyond mere movement, something closer to conceptual representation, which is a significant difference from what is observed in spoken languages.

Another debate concerns whether sign language and spoken language acquisition differ in terms of neural and linguistic development. Studies have approached this debate by comparing the brain anatomy of congenitally deaf and hearing individuals. Due to sensory deprivation during critical developmental periods, deaf individuals showed key neural changes: decreased white matter in and around Heschl’s gyrus, the location of the primary auditory cortex, and increased volume in the posterior insular cortex, neither of which is present in hearing individuals. The reasons for these brain changes could be an inherent decrease in connectivity with the auditory cortex in deaf individuals and their dependence on lip reading for speech comprehension. A study conducted by John Allen in 2013 also found that congenitally deaf individuals showed a larger visual cortex (more gray matter volume) on the right side of the brain compared to hearing subjects. The authors proposed that sensory compensation and plasticity in the absence of auditory input were the causes. Still, early exposure to sign language in infancy could also contribute to this difference. Losing a key sensory modality such as hearing is a setback for congenitally deaf individuals when trying to acquire spoken language, but it increases the ability of the brain to adapt and devote more resources to developing another way of deriving language.

Finally, some debates have also been fought over the proximity of sign language to simple gesturing, but the two seem to exist on a continuum rather than as isolated islands. A 2014 paper written by Gary Morgan, a professor in psychology, sees gestures, especially co-speech gestures (gestures accompanying speech), as holistic and not decomposable, while signs are overwhelmingly complex through their combinatorial structure in phonology and morphology. Arguments have been raised that the evolution of gestures is what led to more complex sign languages. As studies of sign language acquisition in children have shown, infants begin with “manual babbling,” which can be viewed as the simplest form of gesture. However, when they start developing their vocabulary, children assimilate observed sign patterns of similar handshapes and articulation, thereby shifting to a more detailed and formal sign language. As a parallel to spoken language development, you could think of the infant’s babbling of simple sounds as “mouth gestures.” Indeed, when hearing individuals learn to combine simple sounds into complex ones and combine those into words, they have shifted from the “gesture” level to the “language” level.

The wonders of sign language are still subject to scientific inquiry, whether it is grammar in linguistic studies, acquisition of the language in psychological studies, or changes in brain matter in neurological studies. Sign language has not only elevated itself from mere gestures, but has also developed into diverse forms across various cultures and ethnicities. While the debates are still ongoing, and we may not have a concrete answer on whether sign languages and spoken languages are clearly different, we can still examine this mystery.